Building the data infrastructure for spatially aware AI

A modular data engine and infrastructure stack to create, simulate, and deploy real-world AI applications

We help AI perceive and interact with the physical world.

Trusted by research teams at the most advanced AI companies, building models that perceive, reason, and act in the physical world.

What we're building

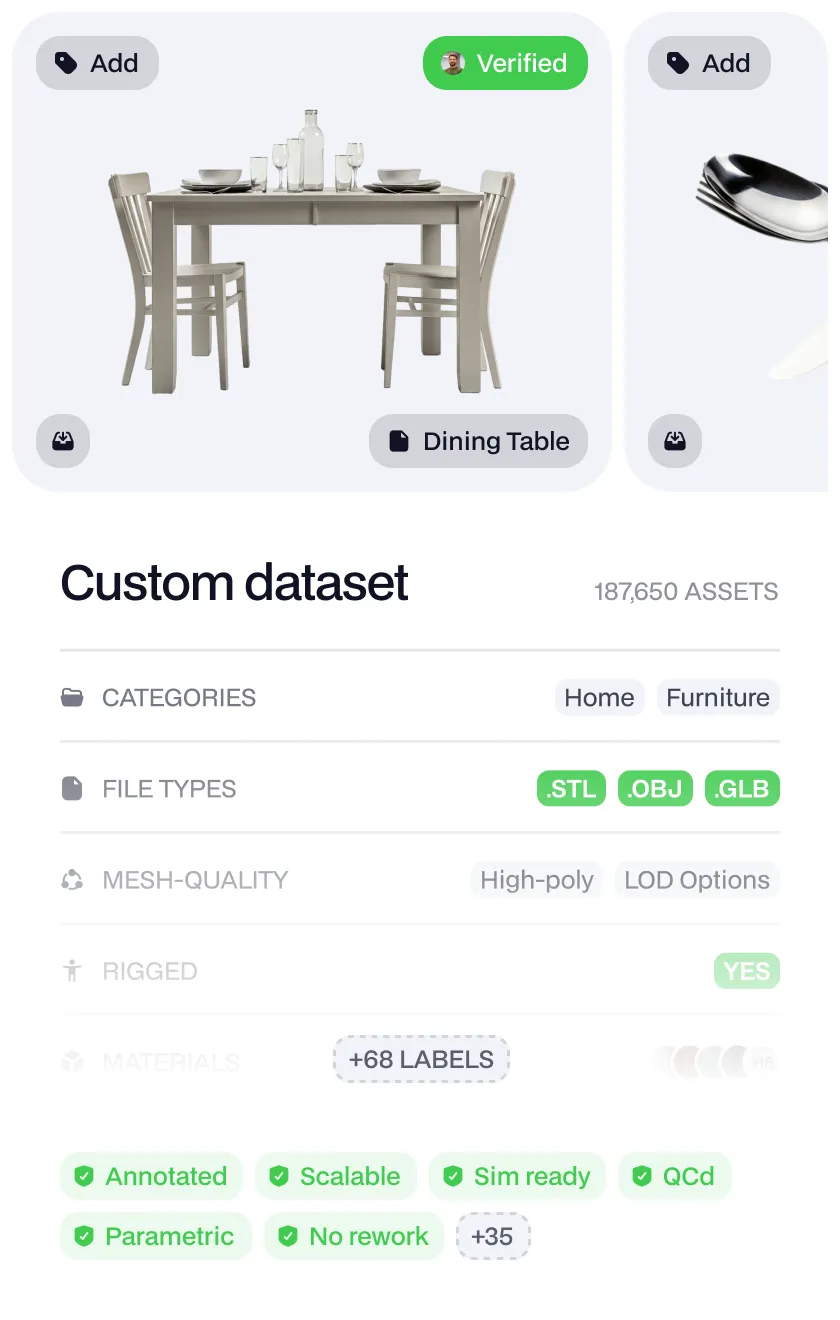

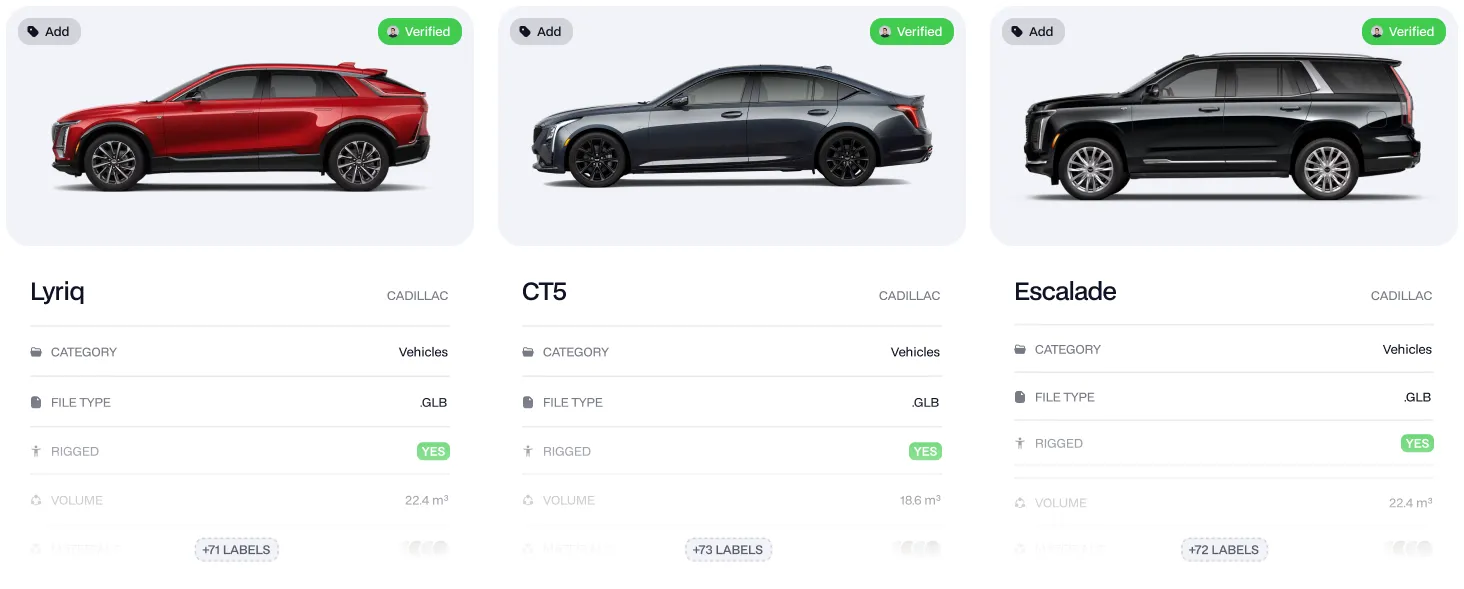

A simple way to request, explore, and export datasets.

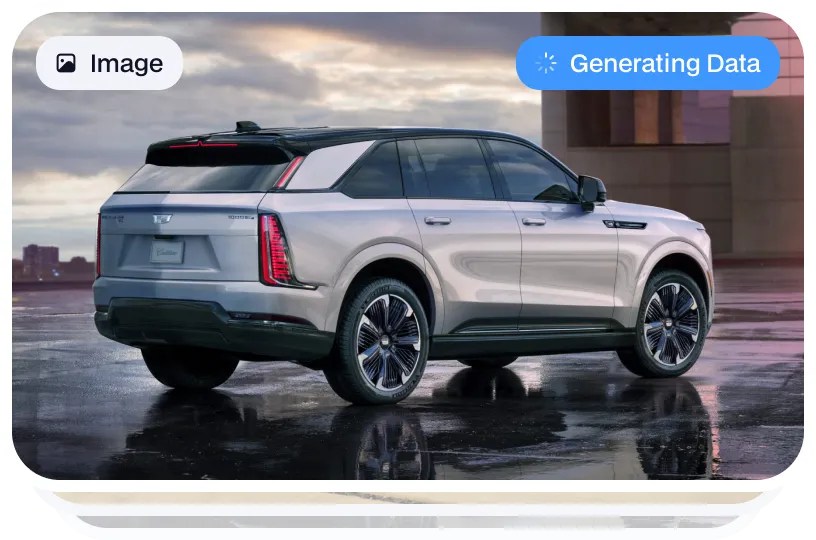

PhyAI offers a user-friendly interface and a powerful API for generating labeled visual assets on demand, including 3D scenes, 3D models, images, and videos.

Procedural generation meets deep annotation.

Our engine creates unique, photorealistic assets, each annotated at the level of objects, pixels, and the relations between them.

Render, label, and export millions of assets per day

PhyAI runs on a distributed rendering and labeling backend, optimized for high-throughput, auto-labeled dataset generation.

Real-world complexity, synthetic control.

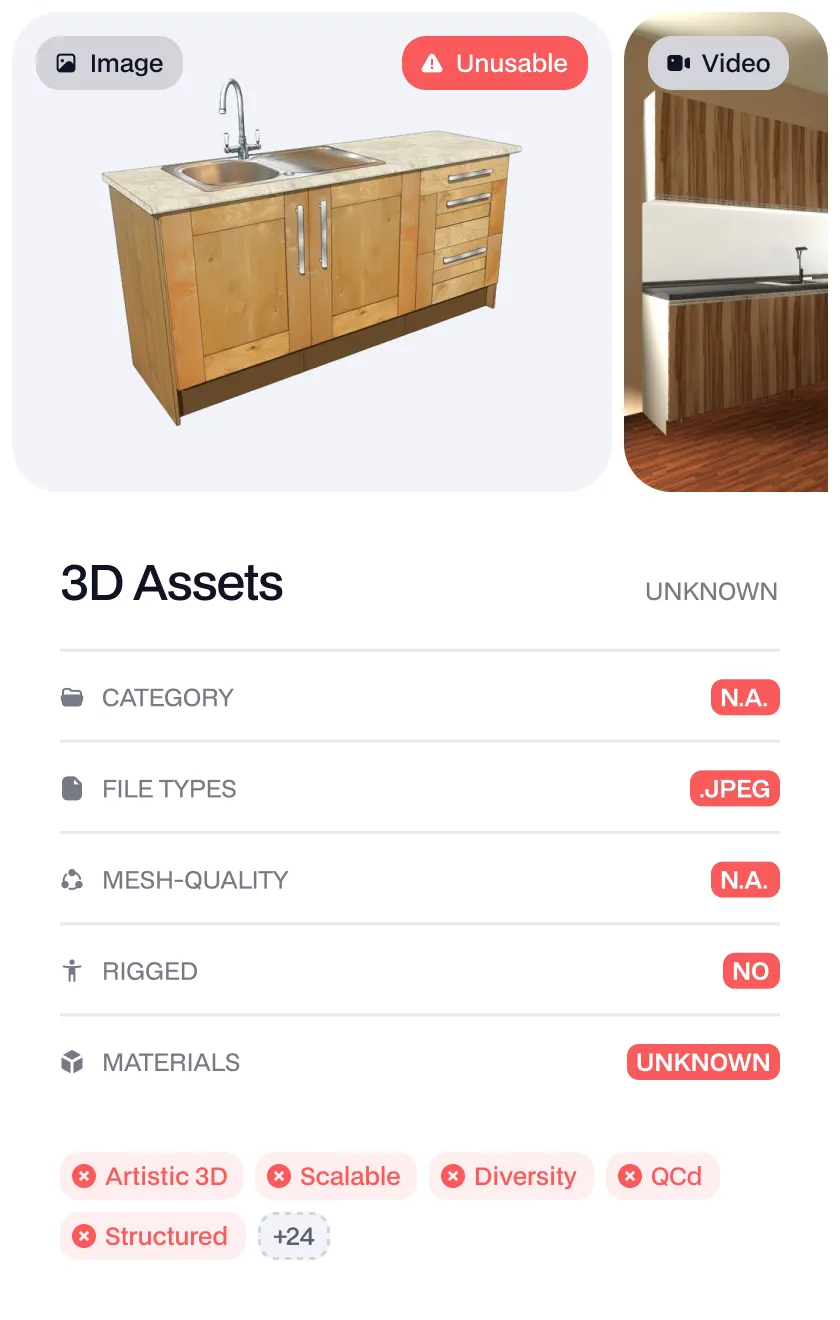

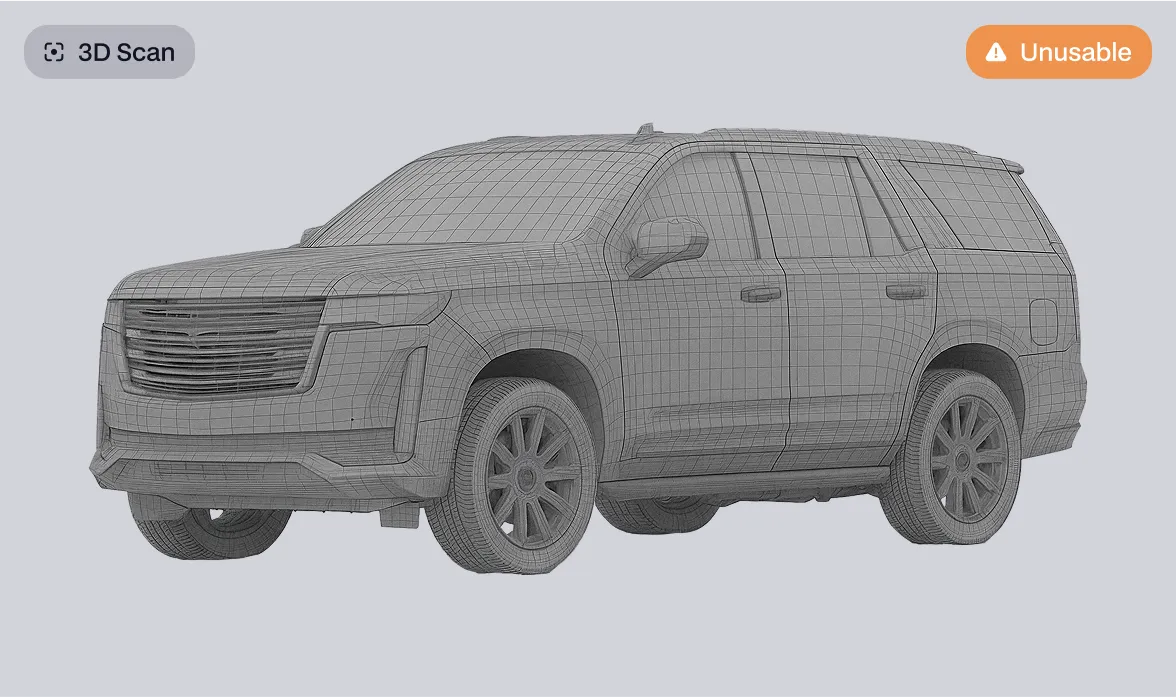

We ingest messy, unstructured inputs, such as product catalogs, 3D scans, or raw design files, and turn them into clean, structured training data.

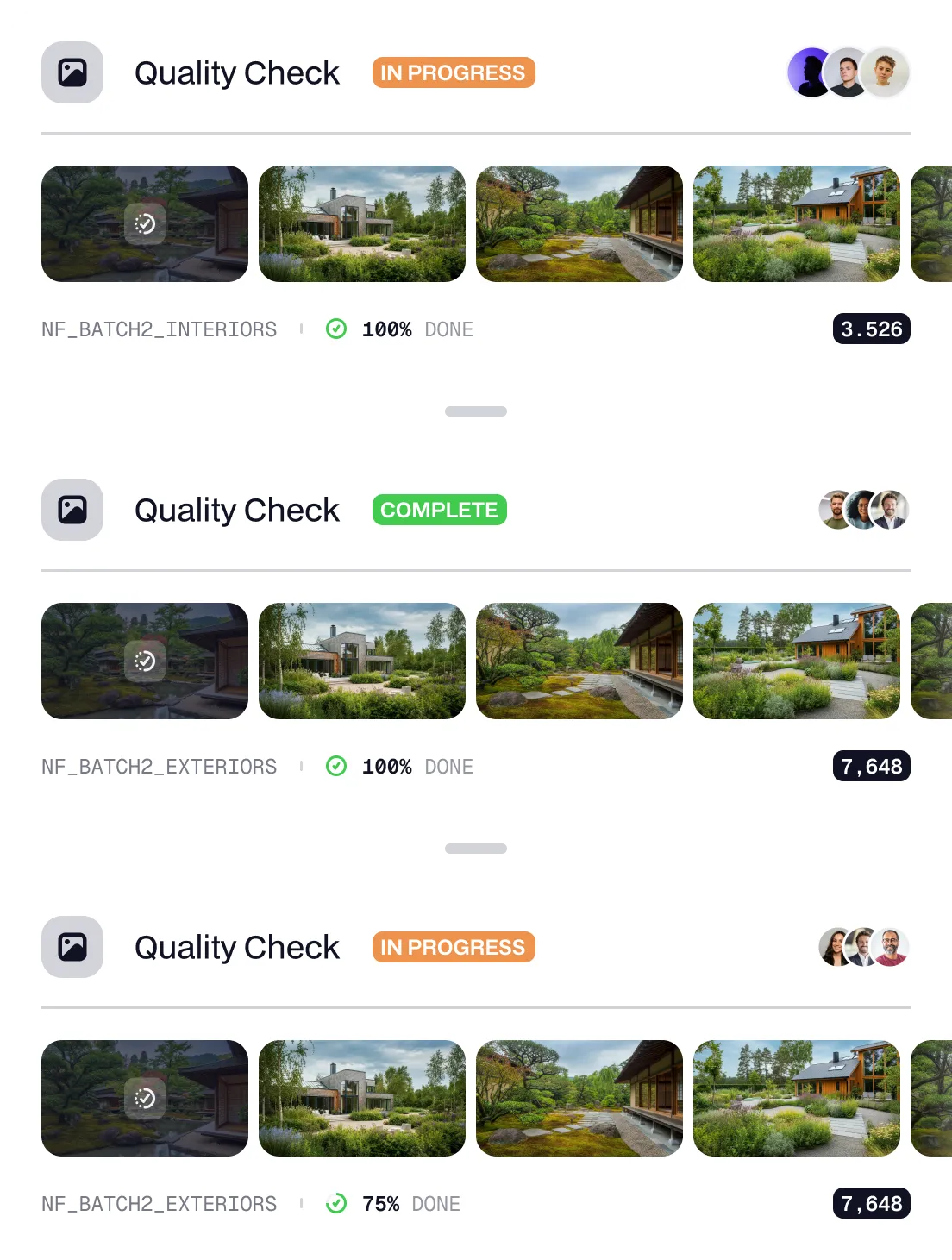

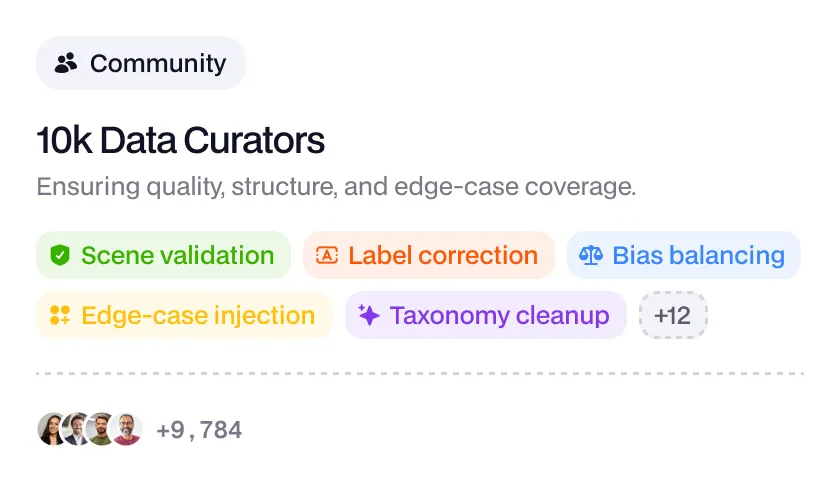

Verified by people, enhanced by AI.

All outputs pass through QA and refinement loops. For critical use cases such as robotics and safety systems, we maintain precision through active human review.

Clean, labeled, and simulation-ready.

Curated scenes are exported in formats ready for computer vision and robotics training, including RGB, depth, segmentation, bounding boxes, and metadata bindings.

Powering the future of embodied AI

Models don't just need more compute; they need more worlds.

We built the worlds.

Partnering with the most advanced technology companies in the world

Start generating the data your models actually need.

Fully annotated, multimodal, and production-ready, at scale.